Introduction

The digital transformation of commerce has established online platforms as central arenas for retail activity, profoundly shaping how consumers discover and purchase goods. Amidst marketplace giants, a diverse ecosystem of platforms (including Shopify, WooCommerce, Adobe Commerce, BigCommerce, and PrestaShop) empowers millions of merchants globally. These platforms provide essential sales infrastructure, but in the highly competitive online environment, effective Digital Merchandising—the strategic presentation and organization of products—is paramount for capturing attention and driving conversion.

A critical aspect of digital merchandising is determining the order in which products appear on category and search pages. Two primary paradigms govern this: traditional Fixed Global Sorting, where merchants impose a consistent order (e.g., manual ‘featured’ placement, ‘best-selling’, price) across broad user segments, and Hyper-Personalized Recommendations. Driven by significant recent advances in AI and data analytics, hyper-personalization aims to dynamically tailor product rankings to individual user preferences, promising enhanced relevance.

While fixed global sorting offers merchants predictability and control—often facilitated through platform tools for manual arrangement or rule-based ordering—this ‘one-size-fits-all’ approach may not optimally cater to diverse consumer needs. Its effectiveness is primarily understood through established behavioral principles, framed by the concept of Choice Architecture. The design of the storefront intrinsically shapes user decisions. Global sorting heavily leverages the primacy effect, granting disproportionate visibility to top-listed items, but can also inadvertently exacerbate choice overload or misrepresent catalogue diversity if not carefully implemented. Furthermore, the default sort order acts as a powerful nudge within this choice architecture, significantly influencing user navigation and perception.

Evaluating the true impact of emerging hyper-personalization against these established global sorting techniques poses considerable challenges, particularly in simulating the complexity of personalization algorithms. These engines frequently represent users ($\mathbf{u}$) and products ($\mathbf{x}_i$) as embeddings in a finite-dimensional space $\mathbb{R}^d$, ranking items by metrics such as the dot product $\mathbf{u}^\top \mathbf{x}_i$. Understanding the potential diversity of rankings generated by such systems is crucial. Concepts from statistical learning theory, particularly Vapnik-Chervonenkis (VC) theory and hyperplane arrangements, provide insight into the expressive capacity of these linear preference models. Each pair of products $(i, j)$ defines a hyperplane $\{\mathbf{u} : \mathbf{u}^\top(\mathbf{x}_i - \mathbf{x}_j) = 0\}$ through the origin, and every region of the resulting arrangement of $\binom{n}{2}$ hyperplanes corresponds to exactly one ranking. For $n$ products in $\mathbb{R}^d$ (assuming general position), the maximum number of distinct rankings inducible by varying the user preference vector $\mathbf{u}$ is therefore bounded by

$$2\sum_{k=0}^{d-1}\binom{\binom{n}{2}-1}{k} = O\!\left(n^{2(d-1)}\right).$$

This number grows astronomically with typical e-commerce catalogue sizes ($n$) and embedding dimensions ($d$).

If we assume that, for a diverse catalogue and user base, the embedding process yields user and product vectors approximating a reasonably uniform or random distribution within $\mathbb{R}^d$, then the vast range of possible user preferences will likely generate a correspondingly vast array of distinct personalized rankings across the population. This sheer variability implies that, from an aggregate viewpoint, the presentation order might appear highly varied or ‘pseudo-random’.
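To make the ranking-count argument concrete, the sketch below (a hypothetical illustration, not part of the simulation) computes the arrangement bound for the $\binom{n}{2}$ pairwise hyperplanes and counts how many distinct rankings a finite sample of random user vectors actually produces. The Gaussian sampling of users and products, the function names, and the sizes are assumptions chosen for illustration.

```python
import random
from math import comb


def ranking_upper_bound(n: int, d: int) -> int:
    """Max regions of a central arrangement of m = C(n, 2) hyperplanes in R^d.

    Each region corresponds to exactly one ranking of the n products by the
    score u . x_i, so this upper-bounds the number of inducible rankings.
    """
    if n < 2:
        return 1
    m = comb(n, 2)
    return 2 * sum(comb(m - 1, k) for k in range(d))


def count_sampled_rankings(n: int, d: int, num_users: int, seed: int = 0) -> int:
    """Count distinct rankings produced by random Gaussian users and products."""
    rng = random.Random(seed)
    products = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
    rankings = set()
    for _ in range(num_users):
        u = [rng.gauss(0.0, 1.0) for _ in range(d)]
        scores = [sum(ui * xi for ui, xi in zip(u, x)) for x in products]
        # Rank product indices by descending dot-product score.
        rankings.add(tuple(sorted(range(n), key=lambda i: -scores[i])))
    return len(rankings)


if __name__ == "__main__":
    n, d = 8, 3
    print(ranking_upper_bound(n, d), count_sampled_rankings(n, d, num_users=20_000))
```

For $d = 2$ the bound equals $2\binom{n}{2}$, the number of rankings met when $\mathbf{u}$ rotates once around the circle; the sampled count approaches the bound from below as `num_users` grows.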

This paper investigates the emergent sales outcomes resulting from two distinct sorting strategies: Fixed Global Sorting versus Hyper-personalization. Our contribution is to analyze these strategies through the lenses of Choice Architecture and Digital Merchandising. Critically, we diverge from the standard recommendation systems literature, which typically assumes personalization boosts engagement metrics like click-through rates (CTR). Instead, we hold interaction probabilities constant, isolating the impact of the sorting architecture on sales performance. Using a simulation model, we show that, under certain conditions, the hyper-personalized approach can yield superior sales outcomes compared to fixed global sorting, demonstrating the potential of hyper-personalization to enhance sales performance within an increasingly competitive landscape.

This paper proceeds by outlining the simulation methodology, detailing the model’s structure, parameters, and the implementation of the two experimental conditions. We then present the simulation results, analyze the observed differences, and discuss the implications for Digital Merchandising strategy, considering the trade-offs between controlled global merchandising and the potential reflected by our personalization proxy, before suggesting directions for future research.

Methodology

Simulation Framework

In the domain of e-commerce, a digital catalogue serves as the primary interface for customers to browse available items. This catalogue, denoted by $\mathcal{C}$, is composed of a set of products. Let the total number of distinct products be $N$, so that $\mathcal{C} = \{p_1, \dots, p_N\}$. Each product $p_i$ (for $i = 1, \dots, N$) represents a unique item offered for sale.

Products are organized within a predefined category hierarchy structure to aid discovery. This hierarchy consists of $L$ levels. For each level $\ell \in \{1, \dots, L\}$, there are $K_\ell$ distinct categories. Each product is assigned to a specific category at each level of this hierarchy. This assignment is represented by a sequence $\mathbf{c}_i = (c_{i,1}, c_{i,2}, \dots, c_{i,L})$, where $c_{i,\ell}$ is the identifier of the category assigned to product $p_i$ at level $\ell$. Typically, these identifiers are integers such that $1 \le c_{i,\ell} \le K_\ell$. This sequence defines the product’s unique path through the hierarchy; for example, $c_{i,1}$ might represent “Electronics,” $c_{i,2}$ “Laptops,” and $c_{i,3}$ “Gaming Laptops.”

Products can also exist in multiple variations, known as variants, which distinguish attributes like size, color, or configuration. Let $V_i$ denote the set of variants for product $p_i$. Each specific variant $v \in V_i$ has an associated stock quantity, $s_i(v) \in \mathbb{Z}_{\ge 0}$, representing the number of units currently available. Thus, the pair $(V_i, s_i)$ captures the specific purchasable forms of product $p_i$ and their availability.
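One possible in-memory representation of a product $p_i$, its category path $\mathbf{c}_i$, and its stock function $s_i$ is sketched below. The `Product` class and its field names are hypothetical; the variant/stock pairs are stored as a dictionary from variant ID to units.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    """One catalogue product p_i (illustrative structure, not the authors' code)."""

    product_id: int
    path: tuple[int, ...]  # category sequence c_i = (c_i1, ..., c_iL)
    stock: dict[int, int] = field(default_factory=dict)  # variant id -> s_i(v)

    @property
    def variants(self) -> set[int]:
        """The variant set V_i."""
        return set(self.stock)

    def is_available(self) -> bool:
        """A product stays listed while at least one variant has stock."""
        return any(units > 0 for units in self.stock.values())


laptop = Product(product_id=0, path=(1, 4, 2), stock={1: 3, 2: 0})
print(laptop.variants, laptop.is_available())  # {1, 2} True
```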

Catalogue Generation

The generation of the synthetic catalogue involves specifying the properties of each of the $N$ products. For a single product $p_i$, this means determining its category assignment sequence, $\mathbf{c}_i$, and its set of variants, $V_i$, along with their initial stock levels. The process consists of two steps, Hierarchy Assignment and Variant Generation, which are detailed below.

Product Hierarchy Assignment

Given the predefined category hierarchy structure with $L$ levels and $K_\ell$ distinct categories available at each level $\ell$, we generate the category assignment sequence for product $p_i$, denoted $\mathbf{c}_i$. Each element $c_{i,\ell}$ (the category ID at level $\ell$) is determined by independently and uniformly sampling from the available categories at that level:

$$c_{i,\ell} \sim \mathcal{U}\{1, \dots, K_\ell\}, \qquad \ell = 1, \dots, L.$$

This process assigns product $p_i$ to a randomly selected complete path through the category structure.
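A minimal sketch of the hierarchy assignment step follows, assuming 1-based category IDs and a list `num_categories` holding $(K_1, \dots, K_L)$; the function name and argument layout are illustrative.

```python
import random


def assign_path(num_categories: list[int], rng: random.Random) -> tuple[int, ...]:
    """Sample c_i by drawing each level's category independently and uniformly.

    num_categories[l] holds K_{l+1}, the number of categories at level l + 1.
    random.Random.randint is inclusive, so each c_il lies in {1, ..., K_l}.
    """
    return tuple(rng.randint(1, k) for k in num_categories)


rng = random.Random(42)
paths = [assign_path([5, 4, 3], rng) for _ in range(3)]
print(paths)
```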

Product Variant Generation

Generating the variants for product $p_i$ involves determining their number and initial stock quantities.

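The number of distinct variants for product $p_i$, denoted $m_i = |V_i|$, is sampled from a discrete uniform distribution, where $m_{\max}$ is the maximum allowable number of variants per product:

$$m_i \sim \mathcal{U}\{1, \dots, m_{\max}\}.$$

For each of the $m_i$ variants, we first assign a unique identifier $v_{i,j}$ (for simplicity, we let $v_{i,j}$ correspond to the index $j$, where $1 \le j \le m_i$). The initial stock quantity for each variant, $s_i(v_{i,j})$, is then sampled independently from a Poisson distribution with rate parameter $\lambda$:

$$s_i(v_{i,j}) \sim \operatorname{Poisson}(\lambda), \qquad j = 1, \dots, m_i.$$

Conceptually, $V_i = \{v_{i,1}, \dots, v_{i,m_i}\}$ is the set of variant identifiers for product $p_i$, and $s_i : V_i \to \mathbb{Z}_{\ge 0}$ is a function mapping each $v_{i,j}$ to its non-negative integer stock level. For practical representation, this information is often stored as a collection of pairs: $\{(v_{i,j}, s_i(v_{i,j}))\}_{j=1}^{m_i}$.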
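The variant step can be sketched with the standard library alone. Python's `random` module has no Poisson sampler, so the sketch below swaps in Knuth's multiplication method, which suits small $\lambda$ but is slow and numerically fragile for large rates (because $e^{-\lambda}$ underflows). The lower bound of one variant per product and the function names are assumptions.

```python
import math
import random


def sample_poisson(lam: float, rng: random.Random) -> int:
    """Knuth's multiplication method for Poisson(lam); fine for small lam."""
    threshold = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def generate_variants(max_variants: int, lam: float,
                      rng: random.Random) -> dict[int, int]:
    """Return {v_ij: s_i(v_ij)} with m_i ~ U{1..max_variants}, s ~ Poisson(lam)."""
    m_i = rng.randint(1, max_variants)  # assumed lower bound of one variant
    return {j: sample_poisson(lam, rng) for j in range(1, m_i + 1)}


rng = random.Random(0)
print(generate_variants(max_variants=4, lam=2.0, rng=rng))
```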

User Query Simulation

User interactions with the catalogue are simulated as queries arriving sequentially. Each query represents a user session aimed at finding products within a specific context, potentially leading to a purchase. The simulation of a single user query involves defining the query context, identifying relevant products, presenting them according to a specified sorting logic, handling user interaction (purchase attempt), and updating the system state.

Query Context Specification

Each query is defined by the user’s navigation choices: the category they browse and the page number they view.

Users may navigate through the category hierarchy (defined by $L$ levels with $K_\ell$ categories at level $\ell$). The simulated user selects a category path $\mathbf{q} = (q_1, \dots, q_D)$, where $D \in \{0, 1, \dots, L\}$ is the depth of the selected path. This path is determined probabilistically level by level: starting at level $\ell = 1$, with probability $h$, the user selects a specific category $q_\ell$ uniformly from the available categories at that level ($q_\ell \sim \mathcal{U}\{1, \dots, K_\ell\}$) and proceeds to level $\ell + 1$. With probability $1 - h$, the user stops refining the path at the current level, finalizing $\mathbf{q}$ with length $D = \ell - 1$. Refinement also ends once level $L$ has been reached, so $D \le L$. If the user stops at $\ell = 1$, the path is empty ($D = 0$), indicating that no category filter is applied.

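Users browse products page by page. The requested page number $P \in \{1, 2, \dots\}$ is sampled from a geometric distribution with success probability $1 - \rho$, where $\rho$ is the probability of navigating to the next page: $\Pr(P = k) = \rho^{k-1}(1 - \rho)$ for $k \ge 1$, so that $\mathbb{E}[P] = 1/(1 - \rho)$. This models the diminishing likelihood of viewing subsequent pages.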

The query context is thus defined by the pair $(\mathbf{q}, P)$.
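The two navigation draws can be sketched as follows. Here `h` stands for the hierarchy filtering probability and `rho` for the paging probability; both names, and the use of a shared `random.Random` instance, are assumptions.

```python
import random


def sample_query(num_categories: list[int], h: float, rho: float,
                 rng: random.Random) -> tuple[tuple[int, ...], int]:
    """Return the query context (q, P).

    q: at each level the user refines with probability h (uniform category),
       otherwise stops; q has at most L entries and may be empty.
    P: page number with Pr(P = k) = rho**(k - 1) * (1 - rho), k >= 1.
    """
    path = []
    for k_level in num_categories:
        if rng.random() >= h:  # stop refining with probability 1 - h
            break
        path.append(rng.randint(1, k_level))
    page = 1
    while rng.random() < rho:  # request the next page with probability rho
        page += 1
    return tuple(path), page


rng = random.Random(7)
print(sample_query([5, 4, 3], h=0.6, rho=0.5, rng=rng))
```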

Product Filtering

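Based on the selected category path $\mathbf{q}$ (of length $D$), the system filters the main catalogue to find matching products. A product $p_i$ with category assignment $\mathbf{c}_i$ matches if its first $D$ category assignments match the query path. The filtered set is:

$$\mathcal{F}(\mathbf{q}) = \{\, p_i \in \mathcal{C} : c_{i,\ell} = q_\ell \ \text{for all } \ell = 1, \dots, D \,\}.$$

When $D = 0$, the condition is vacuous and $\mathcal{F}(\mathbf{q}) = \mathcal{C}$. Let $F = |\mathcal{F}(\mathbf{q})|$ be the number of products matching the query filter.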

Pagination

The system determines the total number of products, $T$, potentially viewable by the user up to the requested page $P$. This depends on the number of products displayed per page, $S$:

$$T = \min(P \cdot S,\ F).$$

This represents the size of the product pool from which the user’s view is constructed.
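Filtering by path prefix and computing the viewable pool size are both short operations. This sketch assumes the catalogue is a dictionary from product ID to category path and that $T = \min(P \cdot S, F)$; the function names are illustrative.

```python
def filter_products(paths: dict[int, tuple[int, ...]],
                    q: tuple[int, ...]) -> list[int]:
    """F(q): products whose first len(q) categories equal the query path."""
    depth = len(q)
    return [pid for pid, c in paths.items() if c[:depth] == q]


def viewable_count(num_filtered: int, page: int, page_size: int) -> int:
    """T = min(P * S, F): number of products shown on pages 1..P."""
    return min(page * page_size, num_filtered)


catalogue = {0: (1, 2, 1), 1: (1, 2, 3), 2: (1, 3, 1), 3: (2, 1, 1)}
matches = filter_products(catalogue, (1, 2))
print(matches, viewable_count(len(matches), page=3, page_size=24))  # [0, 1] 2
```

An empty query path matches every product because `c[:0] == ()` holds for any path.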

Product Presentation Logic (Sorting)

The core of Digital Merchandising lies in how products are ordered. We simulate two distinct sorting functions: the Global Fixed Sorting Function ($\sigma_G$) and the Hyper-Personalized Sorting Function ($\sigma_H$). These functions determine the order in which products are presented to the user after filtering.

The Global Fixed Sorting Function ($\sigma_G$) represents traditional merchandising where products are presented in a consistent, predefined order across all users (e.g., sorted by sales rank, price, or manual curation). Given the filtered set $\mathcal{F}$ and the target count $T$, this function returns the first $T$ products according to that global order:

$$\sigma_G(\mathcal{F}, T) = \big(p_{(1)}, p_{(2)}, \dots, p_{(T)}\big),$$

where $p_{(j)}$ is the $j$-th product in the global order within $\mathcal{F}$.

The Hyper-Personalized Sorting Function ($\sigma_H$) aims to capture the essence of modern recommendation systems that tailor rankings to individual users. Such systems often embed users ($\mathbf{u}$) and products ($\mathbf{x}_i$) into a vector space and rank items by similarity (e.g., $\mathbf{u}^\top \mathbf{x}_i$). As discussed in the introduction, VC theory suggests that even simple linear models can generate a very large number of distinct rankings (up to $O(n^{2(d-1)})$ for $n$ products in $\mathbb{R}^d$). If user preferences ($\mathbf{u}$) and product attributes ($\mathbf{x}_i$) are sufficiently diverse across the population and catalogue, the resulting personalized rankings for different users will vary significantly. Simulating individual user vectors and optimizing rankings for each query is computationally intensive. Given the theoretical potential for extreme variability in rankings across users, we use a pseudo-random presentation as a computationally tractable proxy for the aggregate effect of hyper-personalization, selecting $T$ products uniformly at random without replacement from the filtered set $\mathcal{F}$:

$$\sigma_H(\mathcal{F}, T) = \big(p_{\pi(1)}, \dots, p_{\pi(T)}\big),$$

where $(p_{\pi(1)}, \dots, p_{\pi(F)})$ is a uniformly random permutation of $\mathcal{F}$, drawn independently for each query.
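The two presentation functions can be sketched side by side. `global_rank` is a hypothetical precomputed position for each product (for example, a sales rank), and the personalization proxy relies on `random.Random.sample`, which draws without replacement.

```python
import random


def sort_global(filtered: list[int], t: int, global_rank: dict[int, int]) -> list[int]:
    """sigma_G: the first t products of one fixed order shared by every user."""
    return sorted(filtered, key=lambda pid: global_rank[pid])[:t]


def sort_hyper_proxy(filtered: list[int], t: int, rng: random.Random) -> list[int]:
    """sigma_H proxy: t products drawn uniformly at random without replacement."""
    return rng.sample(filtered, t)


rank = {10: 2, 11: 0, 12: 1, 13: 3}
print(sort_global([10, 11, 12, 13], 2, rank))  # [11, 12]
print(sort_hyper_proxy([10, 11, 12, 13], 2, random.Random(0)))
```

Because `sort_global` returns the same top $T$ products for every query with the same filter, repeated queries concentrate exposure on those items, whereas the proxy spreads exposure across all of $\mathcal{F}$.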

User Interaction and Purchase Attempt

Once products are presented, we simulate potential user actions. The product listing shows the user a product rather than its individual variants, so the specific variants and their availability become known only after the user clicks on the product. To capture this, we assume the user has an implicit preference for a specific variant characteristic, represented by a preferred variant ID $v^*$, sampled uniformly from the set of all possible variant IDs:

$$v^* \sim \mathcal{U}\{1, \dots, m_{\max}\}.$$

For a product $p_i$ considered by the user, a purchase takes place if all of the following conditions are met:

- A click occurs (with probability $\gamma$),

- If clicked, the user has purchase intent (with probability $\beta$),

- The preferred variant is offered for product $p_i$, i.e., $v^* \in V_i$, and

- There is available stock for the preferred variant, i.e., $s_i(v^*) > 0$.

When a successful purchase of product $p_i$ occurs via the variant matching the user’s preferred variant ID $v^*$, the system state is updated immediately to reflect the transaction and manage availability:

- Decrement Stock: The available stock for the purchased variant is reduced by one: $s_i(v^*) \leftarrow s_i(v^*) - 1$.

- Variant Availability Update: If the stock $s_i(v^*)$ reaches zero due to this purchase, this specific variant becomes unavailable and cannot satisfy future purchase attempts requiring it.

- Product Availability Update: After the variant stock is updated, the system checks whether all variants of product $p_i$ now have zero stock, i.e., $s_i(v) = 0$ for all $v \in V_i$. If so, the entire product is marked as unavailable and removed from the catalogue $\mathcal{C}$, so that it is no longer presented in future queries.
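The purchase conditions and the state update can be combined into one function. This is a sketch under assumptions: stock is held in a nested dictionary keyed by product and variant ID, `p_click` and `p_buy` stand for $\gamma$ and $\beta$, and the caller decides which displayed products pass through it (for instance, each displayed product in turn, which the text does not specify).

```python
import random


def attempt_purchase(stock: dict[int, dict[int, int]], pid: int, v_star: int,
                     p_click: float, p_buy: float, rng: random.Random) -> bool:
    """Simulate one product impression; mutate stock on a successful purchase.

    stock maps product id -> {variant id -> units}. Products whose variants
    are all at zero are deleted, so later queries can no longer show them.
    """
    if rng.random() >= p_click:          # no click
        return False
    if rng.random() >= p_buy:            # clicked, but no purchase intent
        return False
    variants = stock[pid]
    if variants.get(v_star, 0) <= 0:     # preferred variant missing or sold out
        return False
    variants[v_star] -= 1                # s_i(v*) <- s_i(v*) - 1
    if all(units == 0 for units in variants.values()):
        del stock[pid]                   # product unavailable: remove it
    return True


rng = random.Random(0)
stock = {5: {1: 1, 2: 0}}
print(attempt_purchase(stock, 5, 1, 1.0, 1.0, rng), stock)  # True {}
```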

Analytical Expectations and Factors Influencing Sorting Divergence

In this section, we analyze conditions under which the hyper-personalized sorting function produces meaningfully different product rankings compared to fixed global sorting. Specifically, we examine the expected sizes of the filtered product set, $F$, and the displayed product set, $T$, to characterize scenarios where the set of products displayed under $\sigma_H(\mathcal{F}, T)$ differs from the set displayed under $\sigma_G(\mathcal{F}, T)$.

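We first establish the expected size of the filtered catalogue, $\mathbb{E}[F]$. Given the catalogue size $N$ and the probabilistic nature of user navigation through the hierarchical category structure, we start by considering the number of possible category-based partitions at various depths. The maximum number of leaf categories in a hierarchy with depth $L$ is defined as:

$$M = \prod_{\ell=1}^{L} K_\ell,$$

where $K_\ell$ is the number of distinct categories at hierarchy level $\ell$. However, because users probabilistically choose their stopping depth $D$ based on the parameter $h$, the expected number of effective leaf categories visited by users is given by:

$$\bar{M} = \sum_{d=0}^{L} \Pr(D = d) \prod_{\ell=1}^{d} K_\ell, \qquad \Pr(D = d) = \begin{cases} h^{d}(1-h), & 0 \le d < L, \\ h^{L}, & d = L. \end{cases}$$

Here, the term $\Pr(D = d)$ represents the probability that users stop their navigation precisely at depth $d$ (the empty product for $d = 0$ equals 1). Because category paths are assigned uniformly, a path of depth $d$ matches $N / \prod_{\ell=1}^{d} K_\ell$ products in expectation, so the expected size of the filtered catalogue can be expressed as:

$$\mathbb{E}[F] = \sum_{d=0}^{L} \Pr(D = d)\, \frac{N}{\prod_{\ell=1}^{d} K_\ell} \;\ge\; \frac{N}{\bar{M}},$$

where the inequality follows from Jensen’s inequality.

Next, we calculate the expected number of products displayed to users, $\mathbb{E}[T]$. Given that users browse pages according to a geometric distribution with continuation probability $\rho$ and page size $S$, the expected number of viewed products across all pages is at most:

$$\mathbb{E}[T] \le \mathbb{E}[P] \cdot S = \frac{S}{1-\rho},$$

with equality when the filtered set is never exhausted ($P \cdot S \le F$).

For meaningful differentiation between the sorting methods to emerge, the displayed product set must be strictly smaller than the available filtered set, providing room for variation in sorting outcomes. Therefore, a necessary condition for hyper-personalization to differ substantially from global sorting is $T < F$; in terms of expectations:

$$\frac{S}{1-\rho} < \mathbb{E}[F].$$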

This inequality implies that the number of products users view must be sufficiently limited relative to the filtered catalogue size, enabling personalized sorting to meaningfully vary product presentation between users. If this condition is not satisfied, the hyper-personalized sorting will closely resemble the global sorting, reducing personalization’s potential benefits. Understanding this constraint is critical when evaluating the strategic value of hyper-personalization within digital merchandising contexts.
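The expectations above can be evaluated numerically to check the necessary condition for a given configuration. The sketch assumes uniform category assignment (so a depth-$d$ path matches $N / \prod_{\ell \le d} K_\ell$ products on average) and uses hypothetical function names.

```python
from math import prod


def depth_probabilities(num_levels: int, h: float) -> list[float]:
    """Pr(D = d) for d = 0..L: refine with prob h, stop with prob 1 - h."""
    probs = [h ** d * (1 - h) for d in range(num_levels)]
    probs.append(h ** num_levels)  # refined through every level
    return probs


def expected_filtered_size(n: int, num_categories: list[int], h: float) -> float:
    """E[F] = sum_d Pr(D = d) * N / prod_{l <= d} K_l (empty product is 1)."""
    probs = depth_probabilities(len(num_categories), h)
    return sum(p * n / prod(num_categories[:d]) for d, p in enumerate(probs))


def expected_viewed(page_size: int, rho: float) -> float:
    """E[P] * S = S / (1 - rho); an upper bound on E[T] since T = min(P S, F)."""
    return page_size / (1 - rho)


print(expected_filtered_size(1000, [10], 0.5), expected_viewed(24, 0.5))  # 550.0 48.0
```

The necessary condition then reads `expected_viewed(S, rho) < expected_filtered_size(N, K, h)`.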

Null Hypothesis

We investigate whether the choice of sorting strategy (Fixed Global Sorting versus Hyper-Personalized Sorting) significantly influences the cumulative number of purchases, denoted by $Y(t)$, as a function of the number of user queries $t$.

Let:

- $Y(t)$ be the cumulative number of purchases after $t$ queries.

- $\mu_G(t) = \mathbb{E}[Y(t) \mid \text{FGS}]$ be the expected cumulative purchases under the Fixed Global Sorting condition.

- $\mu_H(t) = \mathbb{E}[Y(t) \mid \text{HPS}]$ be the expected cumulative purchases under the Hyper-Personalized Sorting condition.

The hypotheses are defined as follows:

- Null Hypothesis ($H_0$):

The sorting strategy does not affect the cumulative purchases, meaning the expected purchase trajectories are identical for both conditions: $\mu_G(t) = \mu_H(t)$ for all $t$. Under $H_0$, any observed differences in $Y(t)$ are attributed solely to random variation.

- Alternative Hypothesis ($H_1$):

There exists at least one query count $t$ for which the expected cumulative purchases differ between the two sorting strategies: $\exists\, t : \mu_G(t) \neq \mu_H(t)$.

Statistical Approach: Bootstrapping Uplift

Due to the complex, path-dependent nature of our simulation outputs and the absence of an analytical distribution for cumulative purchases, we employ a non-parametric bootstrap approach. This method directly estimates uncertainty and constructs confidence intervals for the relative uplift in cumulative purchases between the two sorting strategies.

Let:

- $Y_G^{(r)}(t)$ denote the cumulative number of purchases after $t$ queries for the $r$-th run under the Fixed Global Sorting condition.

- $Y_H^{(r)}(t)$ denote the cumulative number of purchases after $t$ queries for the $r$-th run under the Hyper-Personalized Sorting condition.

Assume that $R$ independent simulation runs are available for each condition. The bootstrap procedure is as follows:

Resampling: Perform $B$ bootstrap iterations (e.g., $B = 10{,}000$). In each iteration $b = 1, \dots, B$:

- Draw, with replacement, a simulation run $r_b$ from the $R$ Global Sorting runs to obtain the trajectory $Y_G^{(r_b)}(t)$.

- Draw, with replacement, a simulation run $r'_b$ from the $R$ Hyper-Personalized Sorting runs to obtain the trajectory $Y_H^{(r'_b)}(t)$.

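Uplift Calculation: For each bootstrap sample $b$ and each query point $t$ where $Y_H^{(r'_b)}(t) > 0$, compute the relative uplift:

$$U_b(t) = \frac{Y_G^{(r_b)}(t) - Y_H^{(r'_b)}(t)}{Y_H^{(r'_b)}(t)} \times 100\%.$$

This produces $B$ bootstrap replicates of the uplift time series, $\{U_b(t)\}_{b=1}^{B}$.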

Empirical Distribution: The collection $\{U_b(t)\}_{b=1}^{B}$ forms the empirical bootstrap distribution for the relative uplift at each query point $t$.

Confidence Interval Estimation: For each $t$, determine the 5th and 95th percentiles of the bootstrap distribution, denoted $U_{5}(t)$ and $U_{95}(t)$, respectively. These percentiles define a 90% confidence interval:

$$\mathrm{CI}_{90}(t) = \big[U_{5}(t),\ U_{95}(t)\big].$$

The confidence intervals $\mathrm{CI}_{90}(t)$ are used to evaluate our null hypothesis at each query point $t$:

- If $\mathrm{CI}_{90}(t)$ does not include zero, we reject $H_0$ at the $\alpha = 0.10$ significance level for that query point, indicating a statistically significant difference in cumulative purchases between the two sorting conditions.

- If $\mathrm{CI}_{90}(t)$ includes zero, we fail to reject $H_0$, suggesting that the observed uplift is not statistically distinguishable from zero given the simulation variability.

Consistent rejection of $H_0$ over a relevant range of $t$ provides robust evidence that the sorting strategy exerts a persistent and statistically significant impact on purchase dynamics. Visualizing the mean relative uplift along with its bootstrap confidence intervals over $t$ offers a clear graphical assessment of the magnitude, duration, and significance of the sorting effect.
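The bootstrap procedure can be sketched in plain Python. It follows the steps above literally: each iteration draws one Global Sorting run and one Hyper-Personalized run with replacement, computes the percentage uplift at every recorded checkpoint where $Y_H > 0$, and reads off the 5th and 95th percentiles. The nearest-rank percentile rule and the function names are assumptions.

```python
import random


def _nearest_rank(sorted_vals: list[float], q: float) -> float:
    """Simple nearest-rank percentile on an already sorted list."""
    return sorted_vals[round(q * (len(sorted_vals) - 1))]


def bootstrap_uplift_ci(runs_g: list[list[float]], runs_h: list[list[float]],
                        n_boot: int = 10_000, seed: int = 0):
    """Mean percentage uplift and 90% percentile interval per checkpoint.

    runs_g / runs_h: cumulative-purchase trajectories Y^(r)(t), one per run,
    all recorded at the same checkpoints. Returns (mean, lower, upper) lists;
    entries are None where no replicate had Y_H(t) > 0.
    """
    rng = random.Random(seed)
    n_points = len(runs_g[0])
    replicates = [[] for _ in range(n_points)]
    for _ in range(n_boot):
        y_g = rng.choice(runs_g)  # one Global Sorting run, with replacement
        y_h = rng.choice(runs_h)  # one Hyper-Personalized run, with replacement
        for t in range(n_points):
            if y_h[t] > 0:
                replicates[t].append(100.0 * (y_g[t] - y_h[t]) / y_h[t])
    mean, lower, upper = [], [], []
    for vals in replicates:
        if not vals:
            mean.append(None)
            lower.append(None)
            upper.append(None)
            continue
        vals.sort()
        mean.append(sum(vals) / len(vals))
        lower.append(_nearest_rank(vals, 0.05))
        upper.append(_nearest_rank(vals, 0.95))
    return mean, lower, upper
```

A checkpoint counts as a rejection of $H_0$ when its upper bound is below zero or its lower bound is above zero. Drawing single runs captures the spread of run-to-run uplift; resampling all $R$ runs per iteration and comparing their means would instead target the uncertainty of the mean uplift.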

Parameter Space

The simulation experiments were conducted using a fixed set of parameters that define the synthetic e-commerce environment, the characteristics of simulated user behavior, and the simulation duration. The catalogue consists of $N$ products, which are organized within a three-level hierarchy. At the first level, there are $K_1$ categories, and at the second level, each top-level category is subdivided into $K_2$ sub-categories. This structure yields a total of $M$ distinct leaf categories. Each product is assigned a number of variants drawn from a discrete uniform distribution on $\{1, \dots, m_{\max}\}$, with $m_{\max}$ representing the maximum possible number of variants per product. The initial stock level for each variant is determined by sampling from a Poisson distribution with mean $\lambda$.

User behavior in the simulation is modeled through several probabilistic parameters. The paging probability $\rho$ implies that the expected number of pages viewed per query is $1/(1-\rho)$. In addition, a hierarchy filtering probability $h$ is used to simulate the likelihood that a user applies a category filter at each successive level. The click-through rate for products is $\gamma$, and, conditional on a click, the purchase intent rate is $\beta$.

Each simulation run consists of $Q$ sequential user queries, with $S$ products displayed per page. Key simulation metrics, such as the cumulative number of purchases, are recorded at regular intervals of 1,000 queries.

The core experimental variable is the product sorting strategy, controlled by a Boolean parameter. In the control condition (parameter set to false), products are presented using the fixed global sorting function, $\sigma_G$. In the treatment condition (parameter set to true), products are presented using the hyper-personalized sorting function, $\sigma_H$. This parameter set establishes the context within which the impact of the sorting strategy on cumulative purchases and other performance metrics is evaluated.
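The parameter set can be collected into a single immutable configuration object, with the Boolean treatment flag toggled between conditions. The `SimulationConfig` class, its field names, and its validation rules are hypothetical, and the values in the usage lines are placeholders chosen for illustration, not the settings used in the experiments.

```python
from dataclasses import dataclass, replace
from math import prod


@dataclass(frozen=True)
class SimulationConfig:
    """Hypothetical container for one experimental configuration."""

    num_products: int                # N
    num_categories: tuple[int, ...]  # (K_1, ..., K_L)
    max_variants: int                # m_max
    stock_rate: float                # lambda (Poisson mean of initial stock)
    page_prob: float                 # rho (probability of requesting the next page)
    filter_prob: float               # h (probability of refining one more level)
    click_rate: float                # gamma
    purchase_rate: float             # beta (purchase intent given a click)
    num_queries: int                 # Q
    page_size: int                   # S
    hyper_personalized: bool         # False: sigma_G, True: sigma_H proxy

    def __post_init__(self) -> None:
        for name in ("filter_prob", "click_rate", "purchase_rate"):
            if not 0.0 <= getattr(self, name) <= 1.0:
                raise ValueError(f"{name} must lie in [0, 1]")
        if not 0.0 <= self.page_prob < 1.0:
            raise ValueError("page_prob must lie in [0, 1) for a finite E[P]")

    @property
    def expected_pages(self) -> float:
        """E[P] = 1 / (1 - rho)."""
        return 1.0 / (1.0 - self.page_prob)

    @property
    def leaf_categories(self) -> int:
        """M = K_1 * ... * K_L."""
        return prod(self.num_categories)


# Illustrative placeholder values only, not the settings used in the experiments.
control = SimulationConfig(
    num_products=1_000, num_categories=(5, 4, 3), max_variants=3,
    stock_rate=2.0, page_prob=0.5, filter_prob=0.6, click_rate=0.1,
    purchase_rate=0.2, num_queries=10_000, page_size=24,
    hyper_personalized=False,
)
treatment = replace(control, hyper_personalized=True)
print(control.expected_pages, control.leaf_categories)  # 2.0 60
```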

Results

The simulation was executed for 100 independent runs under each sorting condition, yielding a total of 200 simulation outputs. We aggregated these results to analyze the cumulative number of purchases, $Y(t)$, over time and to examine differences between the fixed global sorting condition (FGS) and the hyper-personalized sorting condition (HPS).

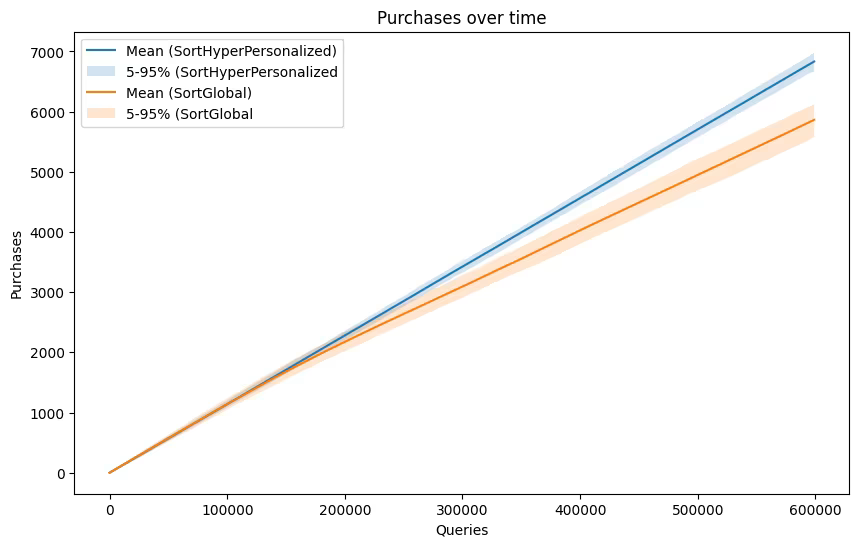

Figure 1 plots the mean cumulative purchases as a function of the number of user queries. The solid lines indicate the average trajectory across the 100 runs for each condition, while the shaded areas represent the 90% empirical confidence intervals (from the 5th to the 95th percentile) at each query point. As expected, cumulative purchases increase monotonically with the number of queries in both cases. However, a clear divergence emerges early: the mean trajectory for the hyper-personalized condition, $\bar{Y}_H(t)$, consistently lies above that for the fixed global sorting condition, $\bar{Y}_G(t)$. In other words, on average, more purchases occur when products are not presented in a fixed global order.

Furthermore, the 90% confidence intervals for the two conditions show minimal overlap beyond the first 10,000–20,000 queries. In many instances, the lower bound for the hyper-personalized condition closely approaches or even exceeds the upper bound for the fixed global sorting condition, suggesting that the difference in cumulative purchases is statistically significant.

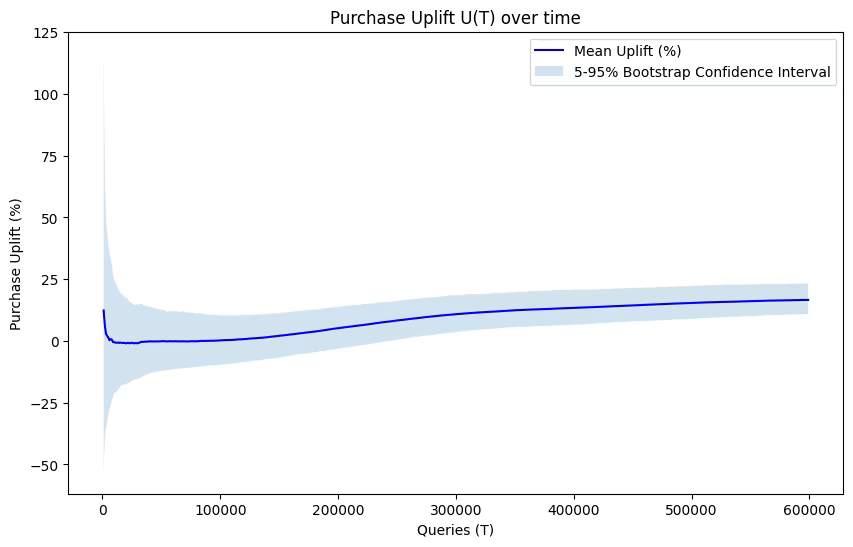

To formally assess and quantify the impact of the sorting strategy over time, we performed a bootstrap analysis. Figure 2 illustrates the mean percentage uplift in cumulative purchases, defined as

$$\bar{U}(t) = \frac{1}{B} \sum_{b=1}^{B} \frac{Y_G^{(r_b)}(t) - Y_H^{(r'_b)}(t)}{Y_H^{(r'_b)}(t)} \times 100\%,$$

along with the 90% bootstrap confidence interval derived from $B = 10{,}000$ bootstrap samples. The uplift is initially near zero but quickly becomes negative, reaching its largest magnitude at approximately 500,000–600,000 queries.

Importantly, the 90% confidence interval for the uplift remains entirely below zero after the first few thousand queries. According to our pre-defined decision criteria, this consistent exclusion of zero leads us to reject the null hypothesis $H_0$ at the $\alpha = 0.10$ significance level. These results provide strong evidence that the fixed global sorting strategy results in a statistically significant reduction in cumulative purchases compared to the hyper-personalized approach.

The dynamic nature of the uplift suggests that the negative impact of fixed global sorting is most pronounced during the early-to-middle stages of user interaction. This pattern is consistent with the hypothesis that a fixed sorting order accelerates the depletion of high-visibility items, thereby reducing the likelihood of subsequent purchases. As the simulation progresses and inventory constraints tighten, the relative disadvantage of fixed global sorting diminishes, although it remains statistically significant throughout the observed timeframe.

Conclusion

In this study, we used a hierarchical simulation model to examine how two product sorting strategies, Fixed Global Sorting and Hyper-Personalized Sorting, affect cumulative purchase volume, denoted by $Y(t)$, in a simulated e-commerce environment. Our model incorporated key elements of online retail, including multi-level product hierarchies, product variants with depletable stock, and probabilistic user behaviors related to browsing and purchasing.

Empirical findings from 100 simulation runs per condition reveal a clear and statistically significant divergence between the two strategies over 100,000 user queries. Specifically, the Fixed Global Sorting condition, which yields cumulative purchases $Y_G(t)$, consistently resulted in lower purchase volumes than the Hyper-Personalized Sorting condition, which yields $Y_H(t)$. A bootstrap analysis confirmed this difference: the relative uplift,

$$U(t) = \frac{Y_G(t) - Y_H(t)}{Y_H(t)} \times 100\%,$$

was statistically negative for the vast majority of the simulation period at the $\alpha = 0.10$ significance level. The negative uplift was most pronounced early on and then gradually diminished toward the end of the simulation.

These results challenge the notion that a fixed global sort order inherently improves sales performance. Although such sorting can enhance the visibility of top-ranked items through the primacy effect, it may also accelerate the depletion of popular product variants, resulting in missed opportunities later when key inventory is exhausted. In contrast, a Hyper-Personalized Sorting strategy, by more evenly distributing product visibility, appears to preserve inventory and ultimately drive higher cumulative purchases.

The implications for e-commerce practitioners are significant. Relying solely on fixed global sorting strategies may inadvertently restrict sales by prematurely depleting high-demand items. Our findings underscore the need for more dynamic, data-driven merchandising approaches that account for factors such as inventory levels, product diversity, and nuanced user preferences. Future work should extend this analysis by exploring different parameter settings and incorporating additional performance metrics, such as revenue or long-tail discovery, to provide a more comprehensive view of sorting strategy impacts.

In summary, this simulation study demonstrates that deliberate product ordering does not guarantee improved performance. Instead, a hyper-personalized approach may offer a more effective means of sustaining sales over time, highlighting the importance of adaptive merchandising strategies in the evolving landscape of online retail.