The problem with text

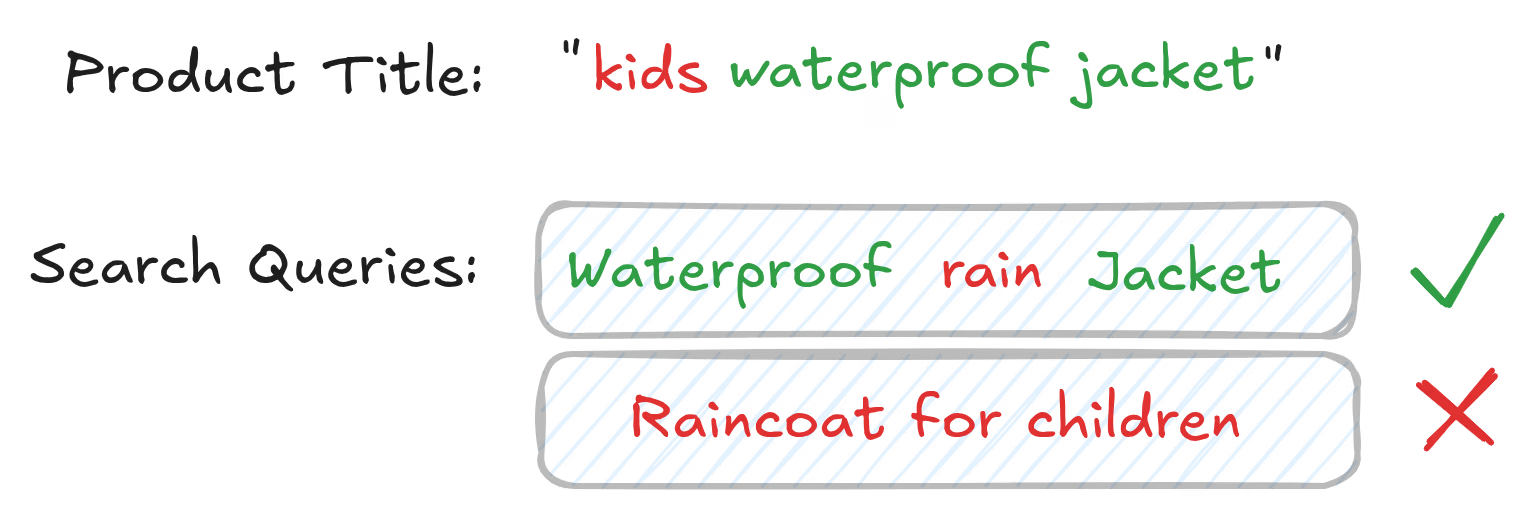

Traditionally, when you use the search feature to find a new pair of shoes on your favourite clothing site, or a piece of furniture on a homeware site, the text in your search query is matched against the text in the titles, descriptions and other fields of the products on the site. This approach is simple, efficient, and has worked well enough in the past. However, there are many ways to describe the same product, and simple text-matching completely ignores this semantic complexity.
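To make the limitation concrete, here is a minimal sketch of keyword matching. The catalogue entries and the `keyword_search` helper are invented for illustration and are not any particular search provider's implementation.

```python
def tokenize(text: str) -> set[str]:
    """Lowercase and split on whitespace; a deliberately naive tokenizer."""
    return set(text.lower().split())


def keyword_search(query: str, catalogue: dict[str, str]) -> list[str]:
    """Return the IDs of products whose text contains every query token."""
    query_tokens = tokenize(query)
    return [
        product_id
        for product_id, text in catalogue.items()
        if query_tokens <= tokenize(text)
    ]


# Hypothetical catalogue entries, invented for this example.
catalogue = {
    "sku-1": "white leather trainers with gum sole",
    "sku-2": "black canvas sneakers",
}

print(keyword_search("leather trainers", catalogue))  # ['sku-1']
print(keyword_search("leather sneakers", catalogue))  # []
```

The second query describes the same kind of shoe as the first, but because "sneakers" never appears in the text for `sku-1`, nothing is returned.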

Okay, let’s assume for now that we’ve replaced our simple text-matching algorithm with a more advanced one: a state-of-the-art LLM-based search algorithm that understands the semantic meaning of words and phrases. We still have a problem: we’re ignoring an incredibly important component associated with every product in an online storefront - images!

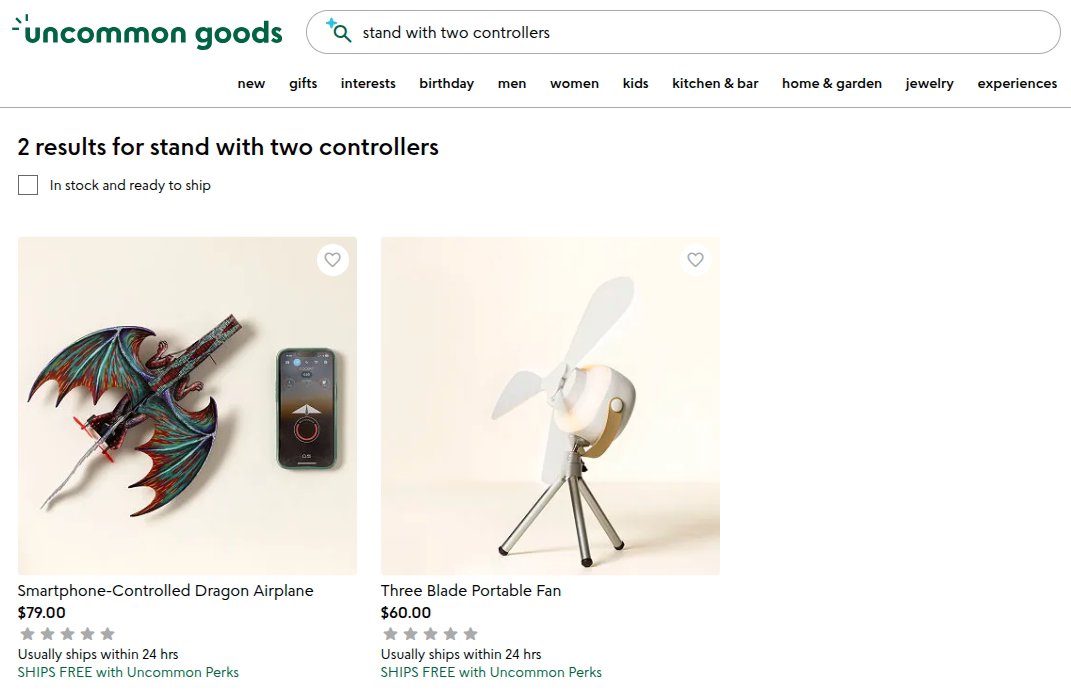

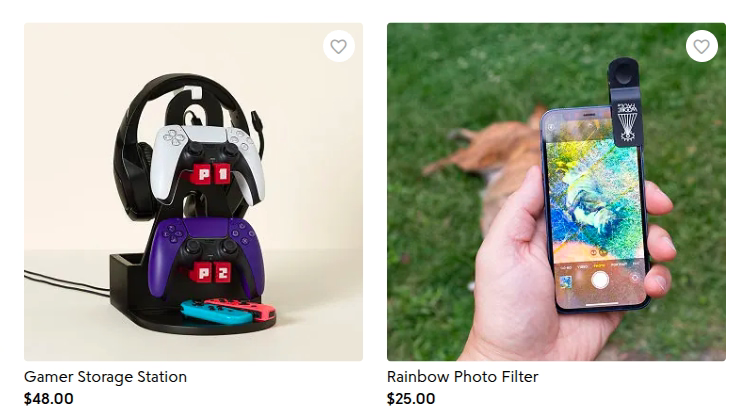

Unfortunately, text data will never be enough to fully describe all the visual components of products, leading to scenarios like this one, where our search query returns no results even though a product matching that description exists on the site.

In an environment where users have to sift through ever-larger product catalogues, relying solely on text to describe products is no longer sufficient. Users are looking for products that match their intent, and this intent is often expressed visually.

But images aren’t easy

How do we extract valuable information from complex image data? One approach used by many search providers, like Klevu and Algolia, is to simply classify or tag products in your catalogue using an upstream image classifier model. These text-based tags can then be searched over using text-matching algorithms or used for grouping or filtering products.

This is a quick-and-dirty solution to a much bigger problem. Only so much visual information can be captured by the limited range of simple tags a classification model can output. While this is a useful way to add metadata to products, it’s incredibly limited when it comes to enabling multimodal discovery.
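The tagging approach can be sketched roughly as follows. The tag vocabulary, the per-product tags and the `search_by_tags` helper are all hypothetical stand-ins for whatever an upstream classifier would produce; this is not how Klevu or Algolia implement it.

```python
# Hypothetical fixed vocabulary that an image classifier can output.
TAG_VOCABULARY = {"shoe", "sneaker", "white", "black", "leather"}

# Hypothetical tags assigned to each product image by that classifier.
product_tags = {
    "sku-1": {"shoe", "sneaker", "white", "leather"},
    "sku-2": {"shoe", "sneaker", "black"},
}


def search_by_tags(query: str, tags_by_product: dict[str, set[str]]) -> list[str]:
    """Match products using only the query words that exist as tags."""
    query_words = set(query.lower().split())
    usable = query_words & TAG_VOCABULARY  # everything else is silently dropped
    if not usable:
        return []
    return [pid for pid, tags in tags_by_product.items() if usable <= tags]


print(search_by_tags("white sneaker", product_tags))
# ['sku-1']
print(search_by_tags("sneaker with a chunky gum sole", product_tags))
# ['sku-1', 'sku-2']
```

In the second query, "chunky gum sole" is exactly the visual detail the user cares about, but no tag exists for it, so the search falls back to every sneaker in the catalogue.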

Introducing CLIP

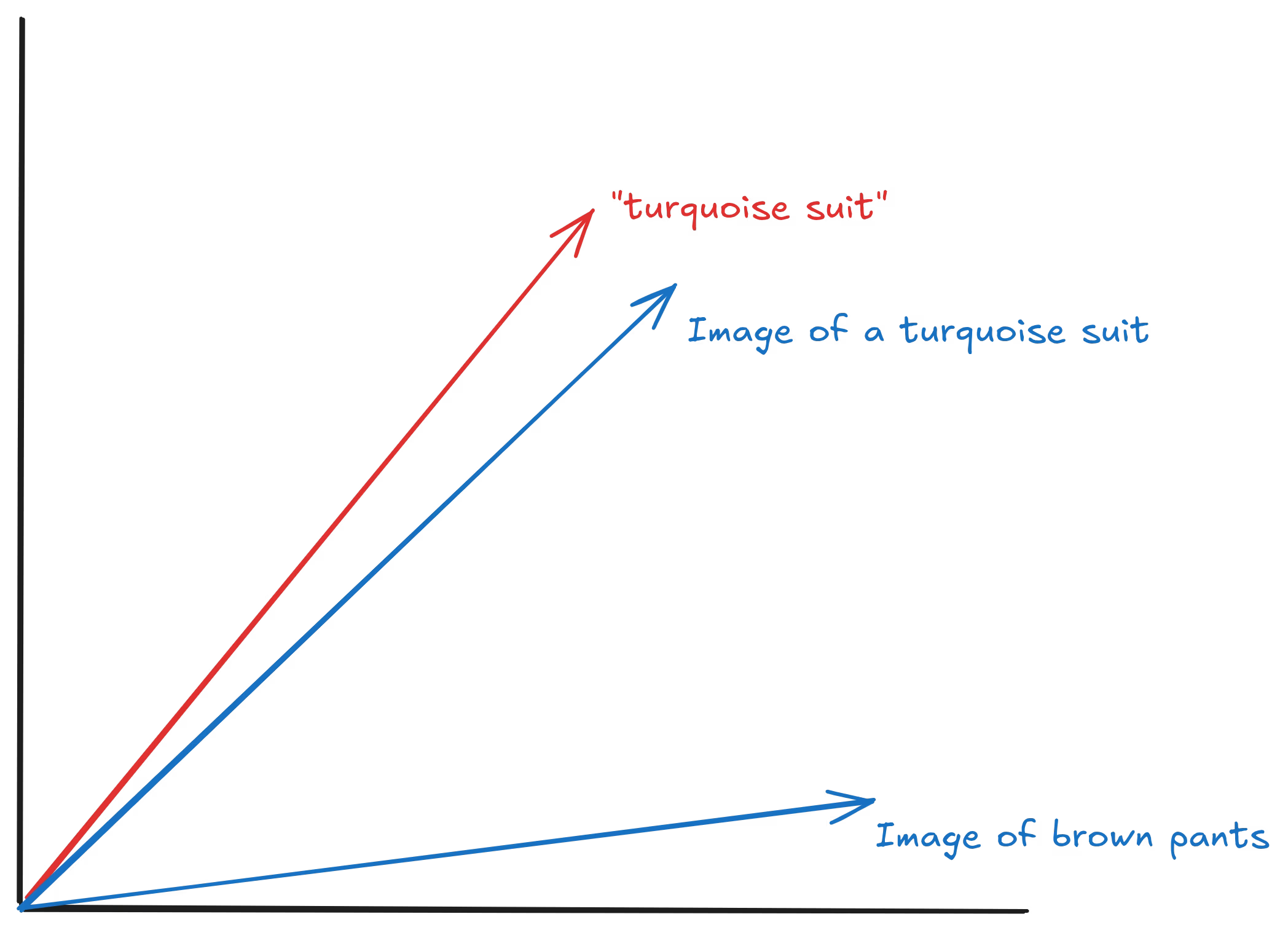

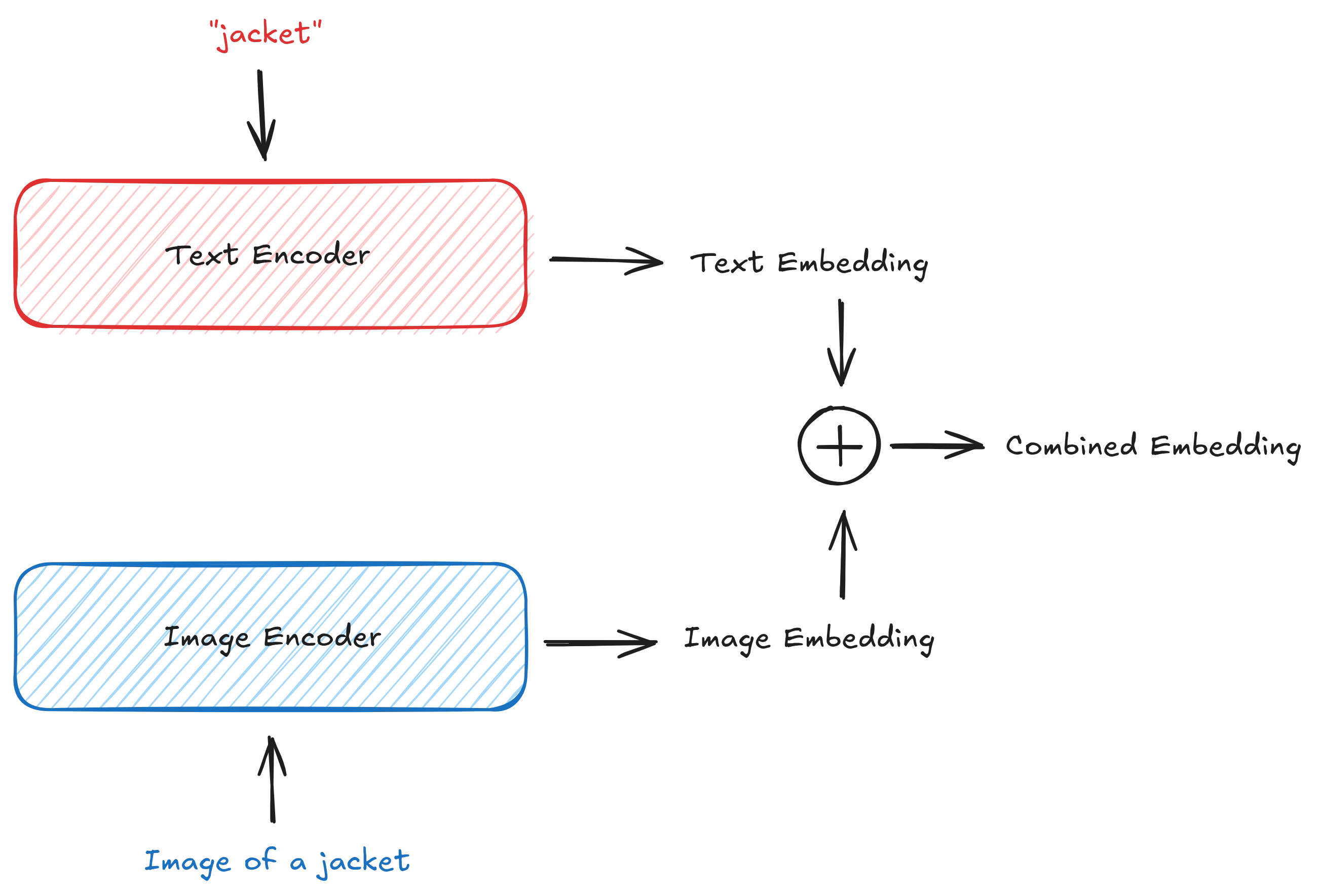

In 2021, OpenAI released their CLIP model, trained to map images and text into the same embedding space. This means that a piece of text and an image that represent semantically similar concepts will result in vectors that are close together in that space.
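As a rough illustration of what "close together" means, the sketch below ranks images against a text query by cosine similarity. The three-dimensional vectors are hand-written toy values standing in for real model outputs (actual CLIP embeddings have hundreds of dimensions), and the file names are made up.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy stand-ins for image embeddings (assumed values, not real CLIP output).
image_embeddings = {
    "red-sneaker.png": [0.9, 0.1, 0.2],
    "blue-mug.png": [0.1, 0.8, 0.3],
}

# Toy stand-in for the text embedding of the query "red running shoe".
text_embedding = [0.8, 0.2, 0.1]


def rank_images(query_vec: list[float], images: dict[str, list[float]]) -> list[str]:
    """Order image names from most to least similar to the query vector."""
    return sorted(
        images,
        key=lambda name: cosine_similarity(query_vec, images[name]),
        reverse=True,
    )


print(rank_images(text_embedding, image_embeddings))
# ['red-sneaker.png', 'blue-mug.png']
```

Because text and images live in the same space, the same similarity function works for text-to-image, image-to-image and text-to-text comparisons.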

Perfect, we can now search for a product and get results which are semantically similar using the product’s image data! Search providers quickly adopted CLIP, or one of the many variants it spawned, to provide “multimodal” search to their customers. This completely changed the way we think about product discovery. Instead of engineering the perfect search query, users can simply describe visual components of the product they are looking for, and the model will return results that match that intent.

Storing multimodal representations of products has other benefits too - for example, we can recommend similar products based on their visual and textual features by simply finding the nearest neighbours in the embedding space. This is a powerful way to provide users with a more personalised experience, and doesn’t suffer from the cold start problem that traditional recommendation systems do.
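A nearest-neighbour recommendation of this kind can be sketched in a few lines. The product IDs and vectors below are toy values chosen for illustration, and a brute-force scan is used for clarity; a production system would more likely rely on an approximate nearest-neighbour index.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def similar_products(
    product_id: str, embeddings: dict[str, list[float]], k: int = 2
) -> list[str]:
    """Return the k products whose embeddings are closest to the given one."""
    anchor = embeddings[product_id]
    scored = [
        (cosine_similarity(anchor, vec), other)
        for other, vec in embeddings.items()
        if other != product_id
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [other for _, other in scored[:k]]


# Toy product embeddings (assumed values, not real model output).
product_embeddings = {
    "sku-1": [0.9, 0.1, 0.0],
    "sku-2": [0.8, 0.2, 0.1],
    "sku-3": [0.0, 0.9, 0.4],
    "sku-new": [0.9, 0.1, 0.05],  # just listed: no clicks or purchases yet
}

print(similar_products("sku-new", product_embeddings))
# ['sku-1', 'sku-2']
```

Note that `sku-new` gets sensible neighbours despite having no interaction history, because its embedding is derived from its image and text rather than from user behaviour.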

What if we could do better?

There is a fundamental problem with CLIP, which is that it processes image and text data separately, rather than processing them as a single “interleaved” input where pieces of the text can “attend” to patches of the image.

Because the text encoder isn’t “aware” of the image, and the image encoder isn’t “aware” of the text, information regarding specific parts of both inputs can get lost.

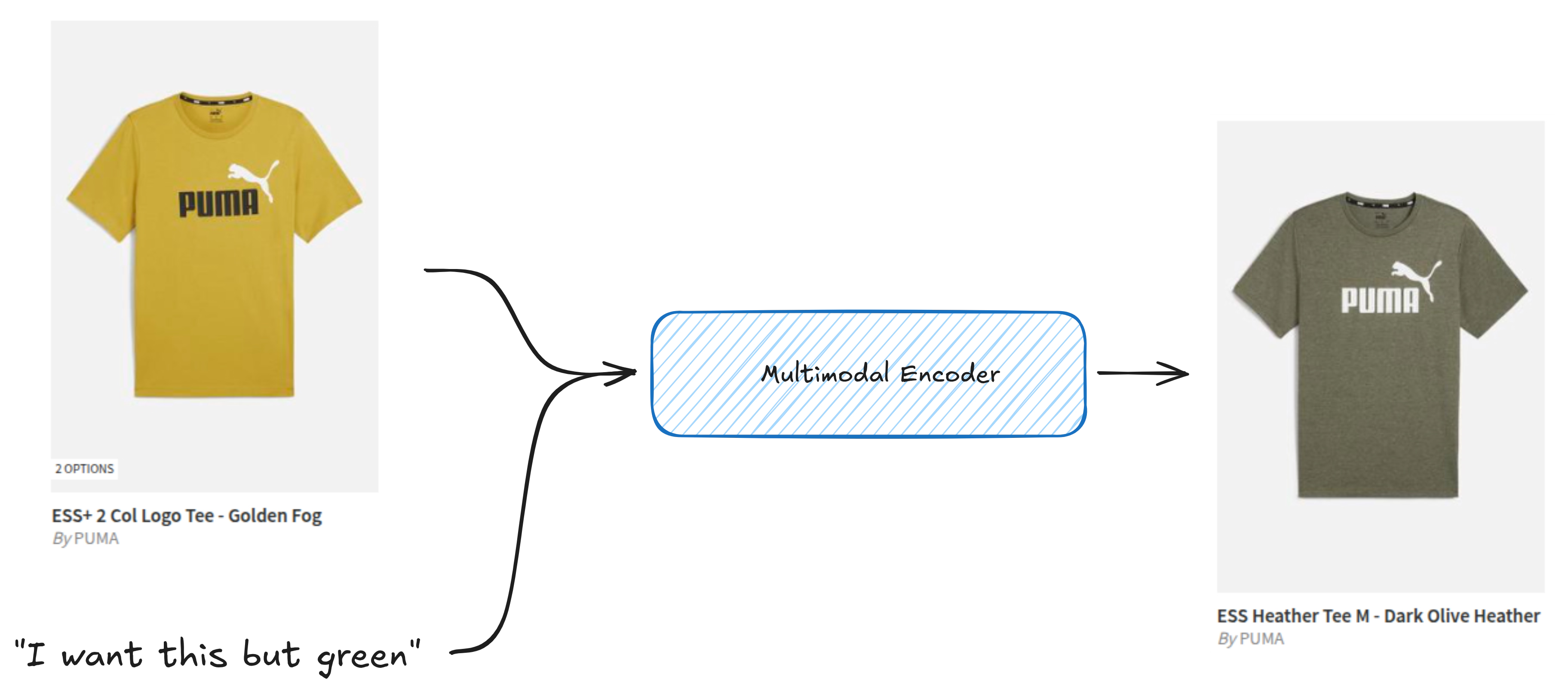

This limits the potential use cases of these models, like composed image retrieval, where an image and a query are combined to produce a specific intent, for example, “a red version of this shoe” or “this cup but without text on it”.

This requires the model to relate specific patches of the image to specific words in the query, details which can be diluted in a dual-encoder architecture like CLIP, where image and text only meet once their final embeddings are compared. Common embedding models from providers like Jina, Nomic and Marqo, used in many downstream tasks, inherit this constraint.

The future of e-commerce

In recent years we’ve seen a huge wave of innovation, not only in LLMs and visual embedding models but also in multimodal models, which have the potential to completely transform how we interact with e-commerce sites. Our own internal benchmarks show that while text is important, sites can see 50% gains on real-world search queries when using multimodal models. These changes are exciting, and models like CLIP have gone a long way in proving the value of multimodal search, but we are far from realising its full potential. We need to be critical of current approaches and their limitations, and explore new avenues where we can. To change how users discover products, we first need to show them what’s possible using true multimodal models.